An application that explores how spatial computing can enhance the experience of memorizing and recalling life events.

Garden of Memories

Spatial Diary

Starting Point

Despite major technical advances in Virtual and Mixed Reality, the potential of storing memories in 3D space remains largely untapped.

Scope

Explore different levels of abstraction for memories and transform them into tangible shapes. Develop a proof-of-concept application for Meta Quest 3.

Role: Concept, Research, UX, CG Design, Visualization

Outcome

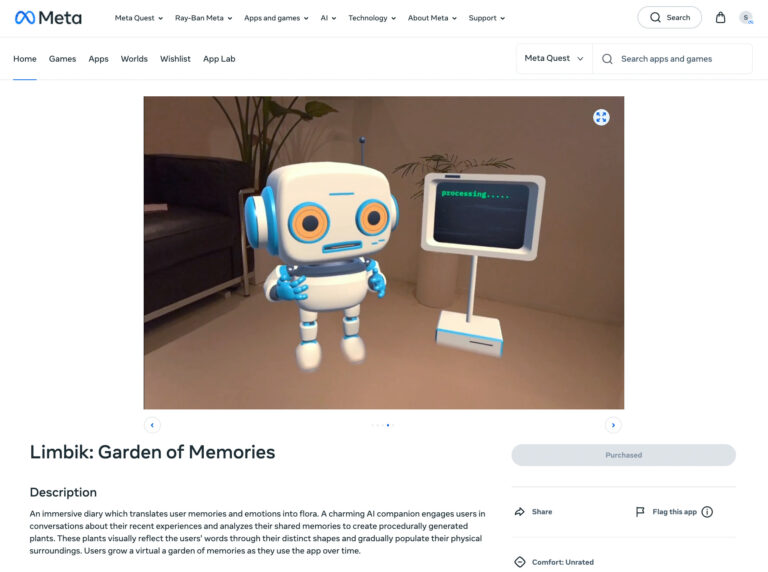

A Mixed Reality diary application that integrates a custom Large Language Model (LLM) to translate user memories and emotions into plants.

Concept

While photos and videos capture life’s precious moments, they often lack emotional depth and dimension. This project explores a new method of recording and visualizing experiences in Mixed Reality (MR) using the Meta Quest 3. The app reimagines diaries as personal, ever-evolving spaces for self-reflection, allowing users to relive memories on a multi-sensory level.

The app lets users record their memories by voice and turns each one into a flower that visually represents their emotions. Over time, users cultivate a virtual garden that gradually populates their physical surroundings, intertwining the digital and physical realms.

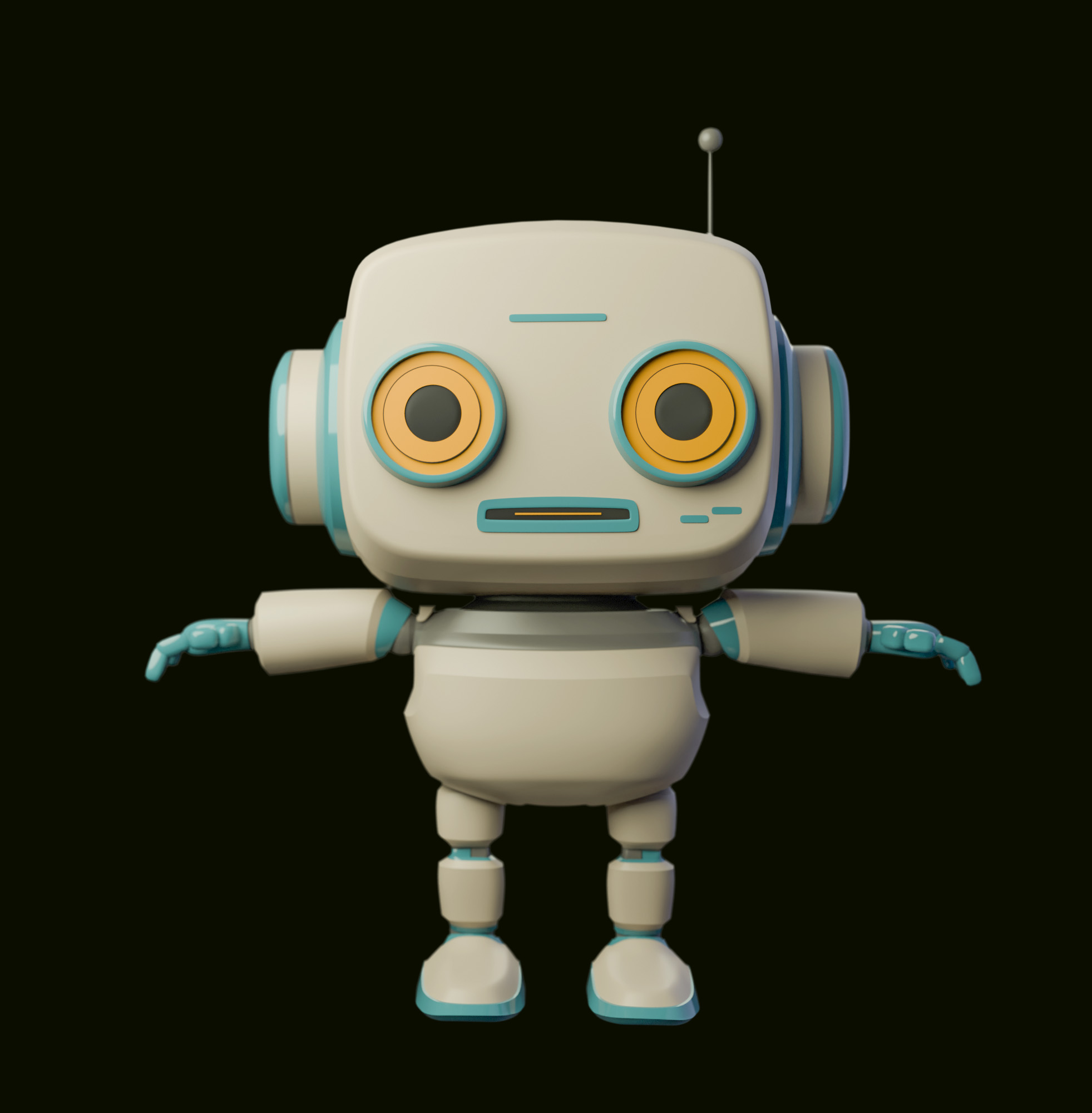

Humanoid User Interface

Robot Companion

Users interact naturally with a humanoid avatar that seamlessly handles the app’s core functions: voice-recording memories on command, processing them into procedural plants, planting these in the Mixed Reality space, and managing the storage of memories.

The robot companion embodies an empathetic gardener who guides users through the memory-recording process with thoughtful follow-up questions, making the experience deeply personal.

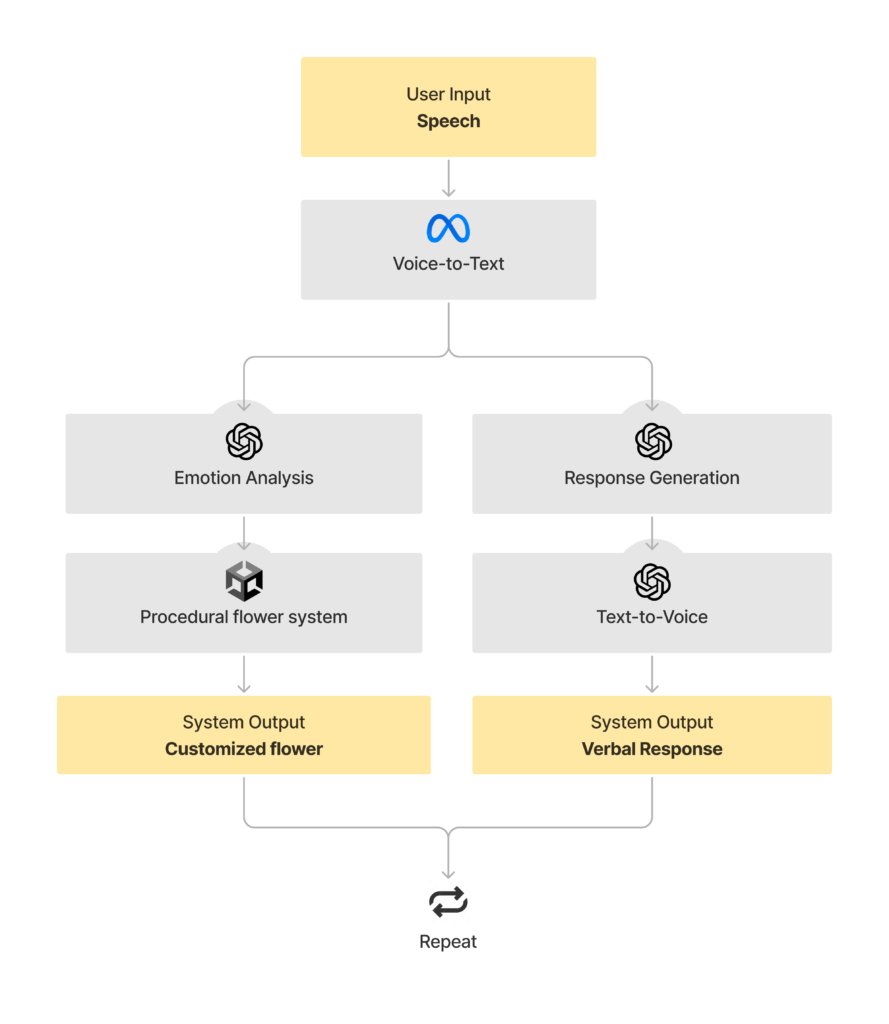

LLM Custom Assistant

Quantifies user memories for the procedural plant system.

Meta Voice SDK

Voice to text: captures users’ voice commands.

OpenAI Text to Voice

Output text prompts as audible replies.

Procedural Plant Control

Custom animation for planting flowers.

Spatial Awareness

Bot navigates room using spatial data from Quest 3.

Character Design

The avatar’s initial appearance was crafted through numerous iterations using the generative AI platform Midjourney. The process began with an initial shape input, followed by a series of iterative refinements. By preserving the core visual elements while subtly adjusting keywords and changing random seeds, a diverse array of variations was generated.

personal robot assitant with spherical shape, simple, soft shapes, short legs / Job ID: af7fbe84-6244-417b-bac5-…

cute personal robot, inspired by the shape of a Japanese toy, simple, soft shapes, pink / Job ID: 8a6c1dd5-56bf-4348-b55b-…

personal robot, inspired by the shape of an n64, simple, soft shapes / Job ID: 10a383f4-cf21-40c6-b869-…

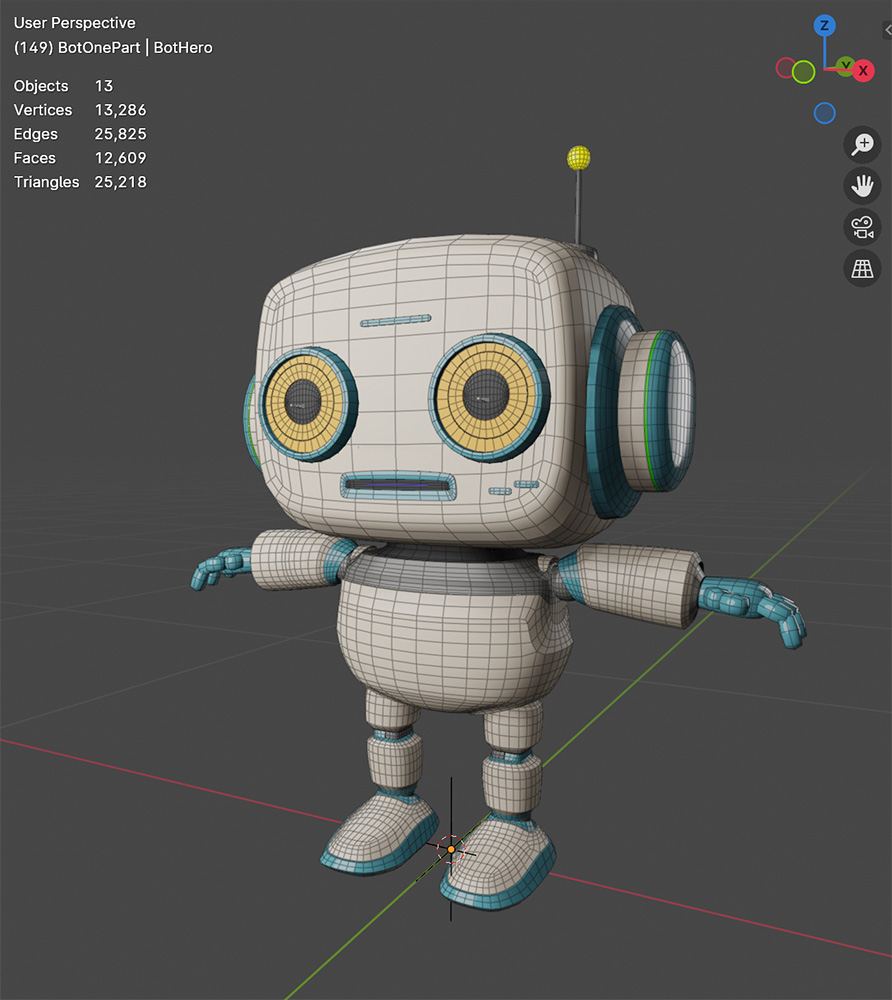

Custom Asset Creation

Character modelling and rigging

The robot companion was designed and created from scratch for the application. Modelling and humanoid rigging were done in Blender 3D and optimized for use in the Unity Realtime Engine.

Plant Cultivation System

Sentiment Quantification

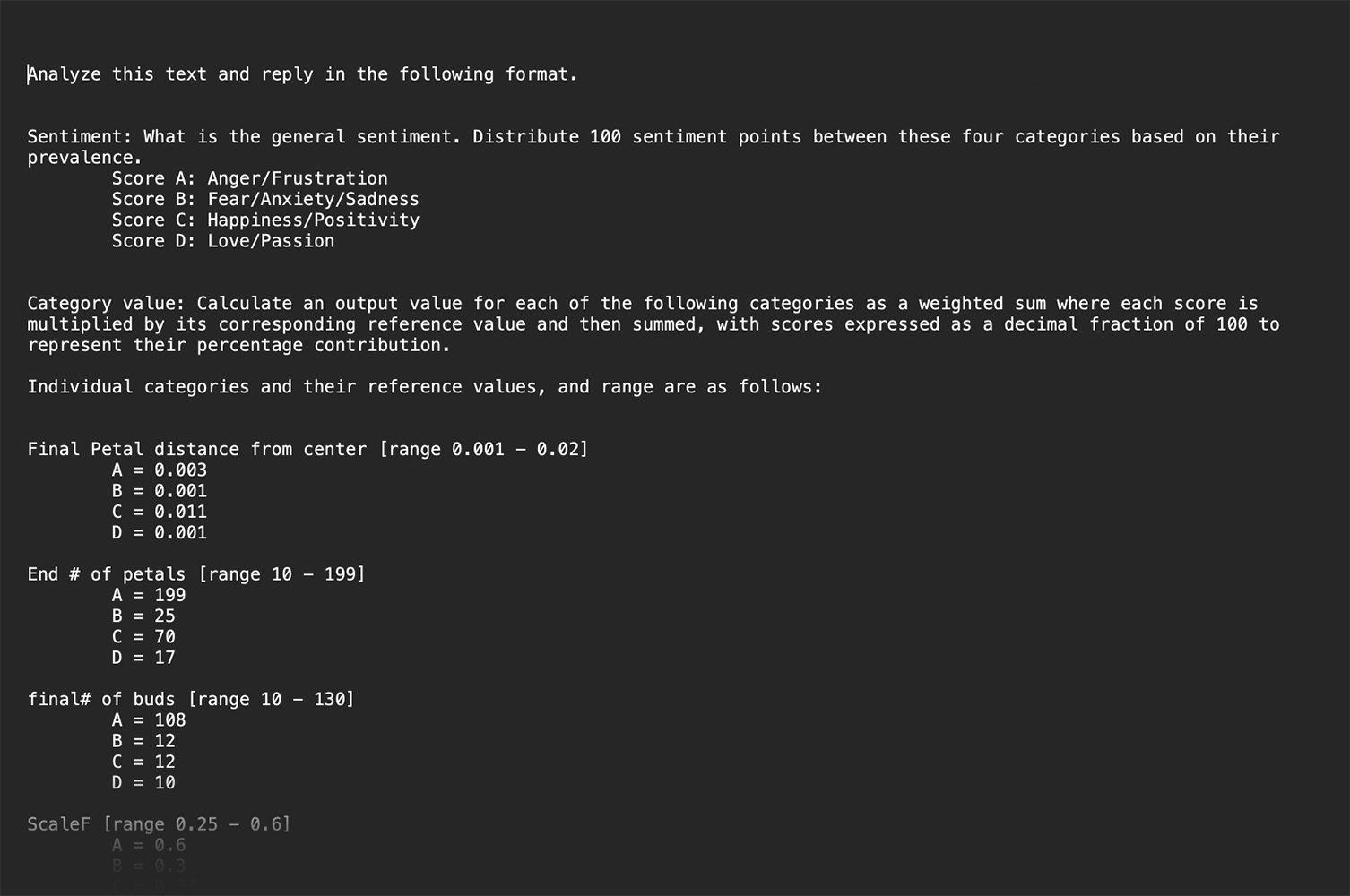

A custom system analyzes the sentiment of the user’s memories from their voice input and generates procedural plants. It interpolates between predefined plant designs, each of which represents one emotion.

Emotion Analysis & Quantification

LLM Assistant

Custom Prompt

The Large Language Model (LLM) Assistant receives the user’s input as text together with a custom prompt. The prompt instructs the LLM to analyze the input and distribute 100 sentiment points among four emotion categories, each of which has its own flower design.
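To make the 100-point scheme concrete, here is a minimal Python sketch of how an application might ask for such a split and validate the reply before passing it on. The four category names, the prompt wording, and the JSON reply format are hypothetical placeholders, not the project’s actual prompt or categories.

```python
import json

# Hypothetical category names: the source only states that there are four
# emotion categories, each with its own flower design.
EMOTIONS = ("joy", "sadness", "anger", "calm")
TOTAL_POINTS = 100

# Hypothetical instruction text, not the project's actual prompt.
SYSTEM_PROMPT = (
    "Analyze the emotions in the user's memory. Distribute exactly "
    f"{TOTAL_POINTS} points among these categories: {', '.join(EMOTIONS)}. "
    "Reply only with a JSON object mapping each category to an integer."
)


def parse_sentiment(reply: str) -> dict[str, int]:
    """Parse the LLM reply and check that it is a valid 100-point split."""
    scores = json.loads(reply)
    if not isinstance(scores, dict) or set(scores) != set(EMOTIONS):
        raise ValueError(f"expected exactly the categories {EMOTIONS}")
    for name in EMOTIONS:
        value = scores[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, int) or value < 0:
            raise ValueError(f"{name}: expected a non-negative integer, got {value!r}")
    total = sum(scores[name] for name in EMOTIONS)
    if total != TOTAL_POINTS:
        raise ValueError(f"points sum to {total}, expected {TOTAL_POINTS}")
    # Return the scores in a fixed category order.
    return {name: scores[name] for name in EMOTIONS}


if __name__ == "__main__":
    reply = '{"joy": 55, "sadness": 10, "anger": 5, "calm": 30}'
    print(parse_sentiment(reply))
```

Validating the reply matters because an LLM is not guaranteed to return well-formed JSON or a split that sums to exactly 100; rejecting bad replies early keeps malformed values out of the plant generator.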

Procedural Flower System

Plant Presets

Unity Realtime Engine

Procedural Flower System

The output of the LLM is an interpolation between the procedural values of the four preset flowers: weighted sums based on the estimated sentiment scores, formatted as a JSON string that is fed into the procedural flower system in the Unity Realtime Engine.
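The weighted-sum step can be illustrated in a few lines of Python. This sketch assumes each preset flower is described by a handful of numeric parameters (the parameter names and values below are hypothetical) and that each preset’s weight is its sentiment points divided by the total. The blended parameters are then serialized to JSON, as described above.

```python
import json

# Hypothetical preset parameters for the four emotion flowers. The real
# procedural system's parameter names and values are not documented here.
PRESETS = {
    "joy":     {"petal_count": 12.0, "stem_height": 0.6, "bloom_size": 0.30, "color": [1.0, 0.8, 0.2]},
    "sadness": {"petal_count": 5.0,  "stem_height": 0.3, "bloom_size": 0.15, "color": [0.3, 0.4, 0.9]},
    "anger":   {"petal_count": 8.0,  "stem_height": 0.4, "bloom_size": 0.20, "color": [0.9, 0.1, 0.1]},
    "calm":    {"petal_count": 6.0,  "stem_height": 0.5, "bloom_size": 0.25, "color": [0.4, 0.8, 0.5]},
}


def blend_flower(scores: dict[str, int]) -> str:
    """Blend preset parameters by weighted sum; weight = points / total points."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("sentiment scores must sum to a positive value")
    weights = {name: points / total for name, points in scores.items()}
    template = next(iter(PRESETS.values()))
    blended = {}
    for param, value in template.items():
        if isinstance(value, list):
            # Blend vector parameters (here an RGB colour) component by component.
            blended[param] = [
                round(sum(weights[n] * PRESETS[n][param][i] for n in weights), 4)
                for i in range(len(value))
            ]
        else:
            blended[param] = round(sum(weights[n] * PRESETS[n][param] for n in weights), 4)
    # JSON string handed to the (hypothetical) Unity-side flower generator.
    return json.dumps(blended)


if __name__ == "__main__":
    print(blend_flower({"joy": 55, "sadness": 10, "anger": 5, "calm": 30}))
```

Linear blending keeps the result predictable: a memory scored 100 points for one emotion reproduces that emotion’s preset exactly, and mixed memories land proportionally between presets. Interpolating colour directly in RGB is a simplification; a perceptual colour space would give smoother blends.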

Outcome

Meta Quest 3

Demo Application

The result of the project was a native application for Meta Quest 3, released via Meta Quest App Lab.

The application was submitted to the Meta Quest Presence Platform Hackathon and gained traction within Meta, resulting in my teammate and me being invited to the Oculus Developer Program.

Research Topic

Memory consolidation

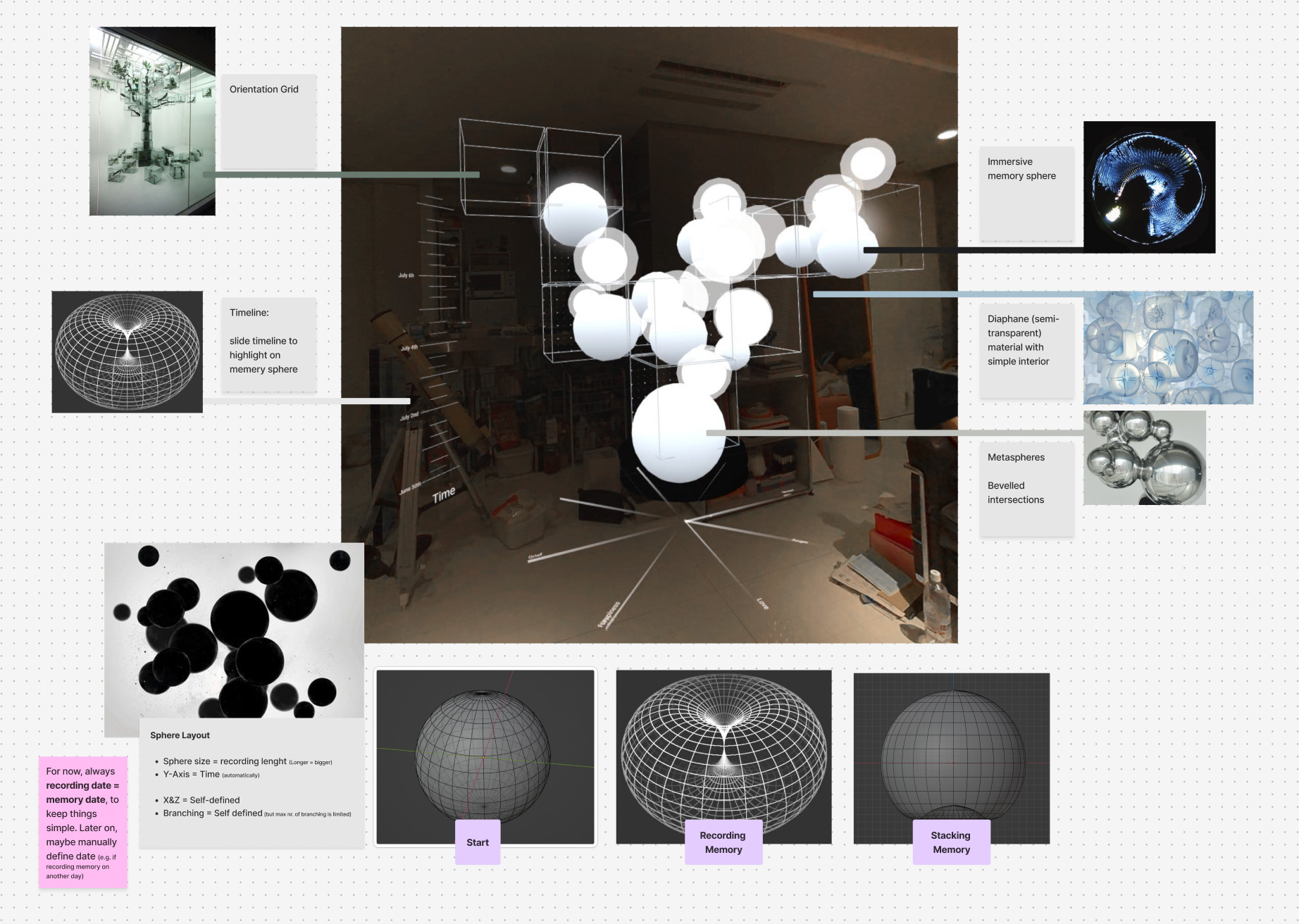

This project highlighted the need to explore novel ways for storing and recalling sensory experiences in virtual spaces.

My teammate and I are actively developing experiments in Extended Reality, including an upcoming Meta Quest app that enables users to record memories into spheres, stacking them into an abstract, sculptural tree.

Software Development Studio

The successful collaboration on this project between me and a seasoned VR engineer laid the groundwork for founding Limbik, our software development studio. Our shared vision and expertise in Extended Reality inspire us to continue exploring innovative solutions.

Limbik focuses on crafting immersive VR/AR experiences that push the boundaries of interactive technology on platforms such as Apple Vision Pro, Meta Quest and beyond.

Takeaways

Due to time constraints and limited resources, sentiment analysis was restricted to the text transcribed from voice input captured by the device’s microphone. In future projects, we aim to include vocal tonality to extract richer information and gain a deeper understanding of user sentiment.

Current AR and VR devices collect vast amounts of data, offering new possibilities for deeper Human-Computer Interaction. This includes gestures, facial expressions, biometric data, and advanced environment sensing. With a focus on responsible data privacy, we aim to explore how this data can enhance the experience of storing and revisiting memories.